From Green Screens to Game Engines

The Limitations of Traditional Chroma Key

Before digital LED stages became mainstream, filmmaking relied heavily on green-screen chroma key—a staple since Superman (1978) and refined through the CG boom of the early 2000s. Yet despite its ubiquity, chroma key has critical drawbacks:

- Post-production dependence & cost: VFX houses must meticulously rotoscope and clean up reflections, especially on shiny surfaces, which costs time and money. For instance, The Mandalorian's reflective beskar armor would have required extensive reflection cleanup against a green screen; shooting against LED panels largely eliminated that work.

- Disembodied acting experience: Actors perform in a void, and directors rely on imagination rather than immersive locations, which undermines emotional believability and performance nuance.

- Lighting mismatches & spill: Artificial lighting often fails to perfectly match the intended environment. Green spill can degrade image quality and require additional color correction passes.

- Constant reshoots: Any change to background or lighting often triggers costly reshoots or total VFX re-dos—impacting tight production schedules.

These factors contribute to high budgets and long lead times. A Wall Street Journal analysis estimated that TV spots using virtual production can be 30–40% cheaper than traditional shoots using green-screen-heavy VFX. This discrepancy explains why many producers are strategically shifting to LED volume solutions.

The Mandalorian Effect: Why Everyone’s Talking About LED Walls

The shift to LED volumes really took off with The Mandalorian (2019), produced on ILM’s “StageCraft” LED Stage, known as “The Volume.” This breakthrough introduced:

- Real-time parallax & in-camera VFX: Wrapped by a 270° curved video wall (and overhead LED ceiling), the Volume used Unreal Engine to render real-time CG environments which moved dynamically with camera tracking—creating accurate parallax and realistic reflections, even on Mando’s armor.

- Accelerated shoot schedules: Favreau confirmed that finishing production on The Mandalorian using the Volume cut overall shoot time by nearly half compared to on-location shooting.

- Improved performance space: Actors reacted emotionally to real landscapes and lighting, not just blurs on a green screen—leading to more authentic performances.

- Scalable deployment: ILM now operates multiple volume stages (Los Angeles, London, Vancouver), plus portable pop-up volumes that can be deployed to sets worldwide.

Usage stats highlight the momentum:

- In 2019, ILM launched the first three StageCraft volumes.

- By October 2022, global LED volume studios reached ~300 worldwide, up from just 3 in 2019.

- The global virtual production market, valued at $2.1B in 2023, is projected to grow to $6.8B by 2030 (CAGR 18%).
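The market figures above can be sanity-checked with the standard compound-growth formula, CAGR = (end / start)^(1 / years) - 1. This short sketch uses only the numbers quoted in the text; the function names are illustrative.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding start_value at `rate` for `years` years."""
    return start_value * (1 + rate) ** years


# Figures quoted above, in billions of US dollars (2023 -> 2030).
rate = cagr(2.1, 6.8, 2030 - 2023)
print(f"implied CAGR: {rate:.1%}")                          # 18.3%
print(f"2030 value at 18%: ${project(2.1, 0.18, 7):.2f}B")  # $6.69B
```

Rounded, the implied rate is about 18%, so the quoted CAGR and the two endpoint values are consistent with each other.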

All of this makes clear why The Mandalorian isn’t just a hit—it’s a revolution. Up next, we’ll break down how the LED Volume works, the tech it relies on, and how game engines like Unreal are reshaping the modern filmmaking toolkit.

What Is Virtual Production? The Tools Behind the Trend

LED Volumes: What They Are and How They Work

Think of an LED volume as a giant, high-resolution, wraparound digital backdrop. Composed of seamlessly tiled LED panels (and sometimes overhead “sky” ceilings), the volume displays live-rendered CG environments in real time, essentially acting as a colossal immersive screen that wraps around the actors and set.

Key components include:

- LED walls & ceilings: Built from linked panels with pixel pitches from about 2 mm to 10 mm (a smaller pitch means higher resolution), designed for high resolution and color consistency.

- Camera tracking: Infrared markers on the camera rig sync movement with the game engine, enabling parallax accuracy and in-camera background adjustments as the camera moves.

- Sync hardware: High-speed frame sync systems ensure the LED content, camera shutter, and engine-render frames align within milliseconds, preventing lag or tearing.

- Integrated lighting: The LEDs themselves cast realistic ambient light onto subjects and sets, providing natural reflections, interactive shadows, and consistent dynamic lighting.
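Pixel pitch translates directly into how many pixels a wall can show: divide each physical dimension by the pitch. The sketch below assumes a hypothetical 20 m × 6 m wall and two pitches from the range above; real walls are assembled from whole cabinets, so actual counts round to multiples of the cabinet resolution.

```python
import math


def wall_resolution(width_m: float, height_m: float, pitch_mm: float) -> tuple[int, int]:
    """Approximate pixel count of an LED wall: physical size divided by pixel pitch."""
    if pitch_mm <= 0:
        raise ValueError("pitch_mm must be positive")
    cols = math.floor(width_m * 1000 / pitch_mm)   # metres -> millimetres, then / pitch
    rows = math.floor(height_m * 1000 / pitch_mm)
    return cols, rows


# Hypothetical 20 m x 6 m wall at a fine and a coarse pitch.
for pitch in (2.6, 10.0):
    cols, rows = wall_resolution(20.0, 6.0, pitch)
    print(f"{pitch} mm pitch -> {cols} x {rows} pixels")
```

At 2.6 mm the hypothetical wall carries roughly 7,700 horizontal pixels; at 10 mm the same wall drops to 2,000, which illustrates why pitch matters so much for surfaces the camera sees in focus.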

This setup removes typical green-screen pain points—no rotoscoping, no mismatched composites—and lets teams capture final pixels live on set.

Game Engines in Filmmaking: Unreal Engine, Unity, and Beyond

Game engines are the brains powering LED volumes. Consider:

- Unreal Engine: The industry leader—UE5 supports high-fidelity Lumen lighting and Nanite geometry, enabling photoreal environments in-camera. A recent study shows UE5 pushing 43 fps in 4K real-time renders, outperforming Unity’s 28 fps in comparable tasks. Epic reports over 300 virtual-production stages using UE as of late 2022, compared to fewer than 12 in 2020.

- Unity: Known for accessible workflows and strong AR/VR integration. Widely used by indie creators and for commercials—its Cinemachine tool supports virtual cinematography.

- Proprietary engines: Studios like ILM build custom renderers (e.g., Helios for StageCraft) tuned for ultra-low-latency requirements.

- Emerging solutions: Tools like Aximmetry and Antilatency are gaining popularity in European and indie virtual production pipelines.

Real-Time Rendering vs. Post-Render: A Pipeline Comparison

| Workflow Stage | Traditional Chroma Key | LED Volume (In-Camera VFX) |
|---|---|---|
| Environment capture | On-location filming or static backplates; later keyed and composited | Real-time 3D environment rendered live in an on-set game engine |
| Camera tracking | Manual matchmove in post-production | Automated, with in-camera sync via tracking and real-time parallax |
| Lighting & reflections | Added in post; requires separate passes and refinement | Interactive ambient and specular lighting achieved directly via LED |
| Post-production time | Weeks to months depending on scale | Minimal; often limited to editing and grade |
| Creative flexibility | Rigid: added VFX or set changes require expensive reshoots | Dynamic: environments can be updated instantly on set |

Real-time render capability is shifting heavy VFX workloads to production time rather than post-production. According to Autodesk/VFX Voice, this integrated pipeline “starts in pre-production and carries through to post,” with captured digital assets usable at every stage.

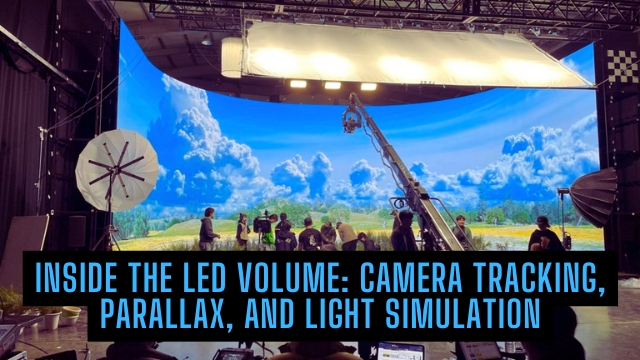

Inside the LED Volume: Camera Tracking, Parallax, and Light Simulation

How Real-Time Parallax Preserves Spatial Accuracy

Camera tracking is the heart of virtual production. Without precise position and lens data, the virtual world would fail to shift with the camera—breaking immersion. Systems like Ncam, Mo‑Sys StarTracker, and Vicon Active Crown deliver sub-millimeter accuracy and sub-5ms latency—essential for maintaining live parallax and perspective consistency.

Real-time tracking enables the virtual environment to shift naturally as the lens moves. That’s why ILM’s StageCraft consistently nails perspective, even during extreme crane or Steadicam moves. Framing and camera movement can be planned live inside Unreal or Unity, letting cinematographers block shots in camera rather than guessing in post.
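The parallax described above comes from recomputing the camera's view frustum every frame from the tracked position. One well-known way to do this for a flat screen is Robert Kooima's generalized (off-axis) perspective projection, sketched below in plain Python. It illustrates the geometry under simplifying assumptions (a single flat wall section, a pinhole camera, coordinates in metres); it is not a description of how StageCraft, nDisplay, or any other product implements this internally.

```python
def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v):
    length = dot(v, v) ** 0.5
    return tuple(x / length for x in v)


def off_axis_frustum(pa, pb, pc, eye, near):
    """Asymmetric frustum extents (left, right, bottom, top) at the near plane.

    pa, pb, pc: lower-left, lower-right, upper-left corners of a flat screen.
    eye: tracked camera (entrance pupil) position in the same coordinates.
    """
    vr = normalize(sub(pb, pa))          # screen's right axis
    vu = normalize(sub(pc, pa))          # screen's up axis
    vn = normalize(cross(vr, vu))        # screen normal, pointing toward the camera
    va, vb, vc = sub(pa, eye), sub(pb, eye), sub(pc, eye)
    dist = -dot(va, vn)                  # distance from camera to the screen plane
    scale = near / dist                  # project screen extents onto the near plane
    return (dot(vr, va) * scale, dot(vr, vb) * scale,
            dot(vu, va) * scale, dot(vu, vc) * scale)


# A 2 m x 2 m wall section centred on the origin, camera 2 m in front of it.
wall = ((-1.0, -1.0, 0.0), (1.0, -1.0, 0.0), (-1.0, 1.0, 0.0))
print(off_axis_frustum(*wall, eye=(0.0, 0.0, 2.0), near=1.0))  # centred: symmetric
print(off_axis_frustum(*wall, eye=(0.5, 0.0, 2.0), near=1.0))  # after a sideways move
```

Moving the camera 0.5 m to the right skews the frustum (left and right extents of -0.75 and 0.25 instead of ±0.5); those four numbers can be fed into a standard glFrustum-style projection matrix. A curved wall can be approximated as several such flat sections.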

Volumetric Lighting and Dynamic Reflections in Real Time

One of the LED volume’s most praised advantages is its ability to light actors and sets interactively. The LED backdrop serves as both environment and light source, updating continuously as the camera moves. In one reported test, a team recreated a candlelit outdoor dusk scene inside an LED volume and achieved light tones and flicker effects indistinguishable from real footage.

Game engines like Unreal use screen-space and planar reflections to keep specular highlights on virtual surfaces, such as wet roads, in step with the camera, while real lenses and reflective props pick up the LED wall’s light directly. Cinematographers have since noted a “new freedom” to move the camera within 2 m of LED surfaces without losing realism.

Integrating Practical and Digital Elements on Set

LED volumes are often hybrid stages, where digital merges with practical. A physical prop can be placed directly within the volume, with interactive LED lighting matching color, shadow, and reflection in real time. Cinematographers like Greig Fraser (The Batman) and Janusz Kaminski (The Fabelmans) have praised this combination for maintaining performance continuity and visual fidelity.

Engineers rely on nDisplay (Unreal’s multi-display synchronizer) and Live Link protocols to feed lens metadata and timecode into the engine, aligning camera frustums with LED output. This in-camera realism is a game-changer—no more waiting months for VFX to light a scene.
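Feeding lens metadata and timecode into the engine comes down to answering one question per frame: where was the camera, and what was the lens doing, at the instant this frame was exposed? The sketch below assumes a hypothetical tracking record (`TrackedSample`) and non-drop-frame timecode. It is not the Live Link API; it only illustrates aligning tracking samples to a frame's timestamp by linear interpolation.

```python
from dataclasses import dataclass


@dataclass
class TrackedSample:
    """Hypothetical tracking record: time (s), camera position (m), focal length (mm)."""
    t: float
    position: tuple[float, float, float]
    focal_mm: float


def timecode_to_seconds(tc: str, fps: int) -> float:
    """Convert non-drop-frame timecode 'HH:MM:SS:FF' to seconds."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    if ff >= fps:
        raise ValueError("frame field must be smaller than the frame rate")
    total_frames = ((hh * 60 + mm) * 60 + ss) * fps + ff
    return total_frames / fps


def sample_at(samples: list[TrackedSample], t: float) -> TrackedSample:
    """Linearly interpolate tracking data to time t.

    Assumes `samples` is sorted by strictly increasing time.
    """
    for a, b in zip(samples, samples[1:]):
        if a.t <= t <= b.t:
            w = (t - a.t) / (b.t - a.t)  # 0 at sample a, 1 at sample b
            pos = tuple(pa + w * (pb - pa) for pa, pb in zip(a.position, b.position))
            focal = a.focal_mm + w * (b.focal_mm - a.focal_mm)
            return TrackedSample(t, pos, focal)
    raise ValueError("time is outside the tracked range")


# Two tracking samples half a second apart, during a camera move with a zoom.
track = [TrackedSample(0.0, (0.0, 0.0, 0.0), 35.0),
         TrackedSample(0.5, (1.0, 0.0, 0.0), 45.0)]
frame_time = timecode_to_seconds("00:00:00:06", fps=24)  # frame 6 at 24 fps
print(sample_at(track, frame_time))
```

Real pipelines add lens-distortion data, drop-frame timecode, and smarter filtering, but the core step of matching pose and lens state to a frame's timestamp is the same idea.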

Budgeting and Timelines — The Real Costs (and Savings) of Virtual Sets

CapEx vs. OpEx: Renting LED Volume Stages vs. Building In-House

Adopting LED volume technology presents a key financial decision: build your own stage or rent existing ones?

- Building a full-scale volume (e.g., 80-ft diameter, 30-ft tall) typically costs US $8–16 million for hardware alone (LED panels, tracking systems, sync hardware, servers) before labor. Add installation, software, and ongoing maintenance, and the total climbs further into the eight-figure range.

- Medium-sized volumes cost around $50k–100k, while compact volumes (small-scale pop-up setups) can be set up for $10k–30k.

- Renting: Daily LED volume rental rates vary by region—from $800/day at boutique studios (Golden Hour) to $5k–16k/day in larger markets like China.

Consider this: unless you’re on a high-volume schedule, renting usually costs less in total than building. However, owning gives absolute control, long-term cost reduction for multi-show studios, and scheduling sovereignty. Indie teams can start small, building a micro-stage for under $500k, and scale as demand grows.
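Whether renting or building wins depends mainly on how many shoot days the stage will actually be booked. A simple break-even model compares the one-off build cost against the per-day difference between renting and running your own stage. All figures in the example are hypothetical placeholders, not quotes from the text or from any studio.

```python
import math


def break_even_days(build_cost: float, own_cost_per_day: float,
                    rental_per_day: float) -> float:
    """Shoot days after which owning becomes cheaper than renting.

    Owning:  build_cost + own_cost_per_day * days
    Renting: rental_per_day * days
    """
    saving_per_day = rental_per_day - own_cost_per_day
    if saving_per_day <= 0:
        return math.inf  # owning never catches up if it costs as much per day as renting
    return build_cost / saving_per_day


# Hypothetical inputs: $12M build, $4k/day to run an owned stage, $16k/day rental.
days = break_even_days(12_000_000, 4_000, 16_000)
print(f"break-even after {days:.0f} shoot days")  # break-even after 1000 shoot days
```

At a hypothetical 150 shoot days per year, 1,000 days is more than six years of bookings. The model deliberately ignores financing, depreciation, resale value, and idle days, all of which matter in a real decision.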

Case Study: Disney’s The Mandalorian vs. Indie Studio Applications

Disney/ILM – The Mandalorian

- Accelerated output: Episodes shot 30–50% faster than comparable green-screen productions thanks to real-time visual feedback and instant environment changes.

- Travel and location reduction: By simulating environments digitally, the crew avoided travel-related disruption, saving time and reducing carbon footprint.

- Attained cinematic polish: Reflections on shiny surfaces (like Beskar armor) were seamlessly captured in-camera, reducing VFX overhead.

Indie & Mid-Tier Applications

- Micro-stage boom: Smaller volumes support tight scenes like dialogue setups or product shoots—enough quality for 4K capture and quick live compositing—at just a fraction of big-budget scale.

- Modular rentals: Many rental houses offer Unreal-integrated volumes with tracking for $800/day, enabling short-term access to high-end tech.

- Remote asset builds: Indie companies are having locations 3D-scanned remotely, then seamlessly integrated into rented volumes, reducing crew travel and setup logistics.

Reducing Reshoots, Travel, and Postproduction Dependencies

Virtual production drastically cuts inefficiencies that blow budgets:

- Reshoots: With live frame revision, the cost and risk of pickup shots or A/B lighting re-dos drop dramatically. One study showed that virtual production stages slashed reshoot costs by up to 40%.

- Travel & logistics: Virtual environments let crews “shoot” forests, deserts, or alien worlds from studio—avoiding location scouting delays, weather disruptions, and travel costs.

- Post-production savings: On-set VFX capture replaces weeks of compositing, reducing post time by nearly 50%—translating to significant salary and scheduling savings.

Summary: Financial Considerations at a Glance

| Factor | Green-Screen Workflow | LED Volume Workflow |
|---|---|---|
| Infrastructure Cost | Low to moderate (green-screen rental) | High CapEx or daily rental costs |
| Schedule Efficiency | Standard; potential delays from reshoots | 30–50% faster shoots (e.g., The Mandalorian) |
| Reshoot & VFX Cost | High post-production expense | Immediate correction, minimal rework |
| Travel & Location Costs | High: permits, transport, lodging | Low: vast environments recreated digitally |
| Creative Flexibility | Limited on-set feedback | Real-time environment changes empower creativity |

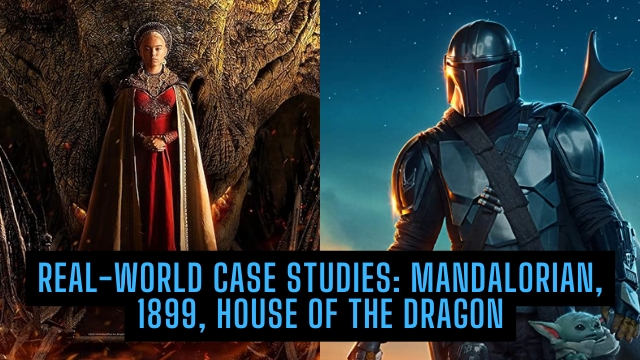

Real‑World Case Studies: Mandalorian, 1899, House of the Dragon

How StageCraft by ILM Changed the Game

ILM’s StageCraft platform revolutionized the industry with The Mandalorian (2019), introducing in-camera VFX via a 270° LED volume and Unreal Engine integration. Here’s what it enabled:

- Efficiency & Speed: Over 50% of Season 1 was filmed inside the volume, cutting location needs and enabling faster shoots. Jon Favreau noted Season 1 produced a full eight-episode run in under two years—a feat seldom matched in episodic TV.

- Live-Capture Lighting: Beskar armor reflections and ambient lighting were captured on set, leaving little or no post VFX work for those elements.

- Flexibility in Production: Directors could swap environments quickly (“from desert to moon base”) within minutes—empowering dynamic storytelling.

- Scalability: ILM now runs major volume stages globally (LA, London, Vancouver), plus deployable pop‑ups—spreading the tech beyond initial use cases.

StageCraft earned an Engineering Emmy in July 2022, cementing its status as a production milestone reshaping modern cinematography.

Netflix’s 1899 and the Virtual Soundstage Revolution

Netflix’s multilingual thriller 1899 (2022) took LED volume adoption further:

- Scale and Integration: At Studio Babelsberg, cinematographers Nik Summerer and crew used ARRI rigs to operate within an expansive LED volume built by ARRI—complete with domed skylight and 270° wraparound environment.

- In-Camera Effects: Framestore’s supervision enabled real-time environments, live ocean surfaces, and natural lighting for atmospheric, mood-driven scenes like the ship’s dining hall.

- Pandemic-Prompted Innovation: With travel restrictions, producers avoided shipboard shoots entirely—virtual stages stood in for complex maritime environments.

- Creative Control: Filmmakers noted “it’s definitely not easier, really—but you can create huge worlds with it”, reflecting steep learning curves and high rewards.

Lessons from HBO’s Use of LED Volumes on House of the Dragon

HBO’s House of the Dragon (2022‑) brought StageCraft to Leavesden Studios, establishing a permanent VP stage for high-end episodic drama.

- Final Pixel Capture: With real-time lighting and visuals, the show captures “final pixel” shots—minimizing VFX overlays in post.

- High Production Value, Lower Risk: At approx. $20M/episode, Dragon needed cinematic quality without runaway green-screen costs. LED stages allowed immersive set pieces and lighting without logistical overhead.

- Motion-Control Advantages: Dragon-rider plates used tech-vis and motion-control rigs linked to the volume—delivering complex, realistic camera moves integrated with CG dragon footage.

- Growing Pains: Reports from The Hollywood Reporter flagged calibration challenges: moiré patterns, syncing overhead LED ceilings, and training crews to perform with live CG lighting—all part of scaling VP in large TV environments.

Virtual Production for Indies — Is It Accessible Yet?

Portable LED Walls and Budget Solutions

Indie filmmakers no longer need to invest millions in massive LED volumes. Compact units—often called “micro-stages”—are emerging at price points between $100,000 and $250,000, significantly lowering the barrier to entry. For example:

- The Vu One Mini offers a 12×7 ft LED wall with built-in tracking, lighting sync, and media server for ~$99K, designed to fit small studios, educational labs, or even corporate shoots.

- Rentable mobile volume systems like Varyostage offer versions under 100 sq m for short-term use, reducing infrastructure footprint while still delivering live parallax and ambient lighting.

On Reddit’s r/virtualproduction, indie creators report achieving impactful “virtual production” on shoestring budgets, using consumer-grade cameras, green screens, and free-to-download Unreal Engine setups. One user emphasized:

“Startup costs from 500k–1MM for an LED wall…Or use any secondary monitor and LiveLink to Unreal”—suggesting even sub-$20K setups can kickstart VP learning curves.

Cloud-Based Virtual Set Environments

Beyond physical LED, cloud-powered environments are simplifying VP workflows. Using Unreal Engine’s Virtual Studio or Vu Studio (bundled with the One Mini), creators can:

- Collaborate remotely—allowing art departments and directors in different locations to co-build sets.

- Assemble real-time environments, lighting, and camera layout in the cloud.

- Stream scenes directly to LED stages via media servers.

Filmmaker Magazine notes that entry-level previz runs on modest hardware—even M1 MacBooks—and costs just a few hundred dollars in asset purchases. Cloud rendering makes it even more scalable: you design once, render many, and deploy across LED walls anywhere.

Freelance-Friendly Virtual Art Departments

The rise of VP has also democratized the art department:

- A growing market of freelance VP specialists now offers modular services—scout online, build sets in Unreal, and deliver turnkey LED sequences.

- On-set “techvis” support, once largely limited to major facilities like ILM, is increasingly offered on demand for indie shoots, either virtually or with small deployable teams.

- Distributed art departments can co-create with directors via VR scouting tools. ILM’s Virtual Art Department pioneered this approach during the pandemic, showing feasibility even on remote projects.

Digital marketplaces also help indie teams access assets: Unreal Marketplace, Megascans, and Vu’s Virtual Studio offer pre-built environments costing as little as $40 for full scene kits.

Summary: Indie Virtual Production Is Now Viable

| Category | Traditional LED Volume | Indie VP Setup |
|---|---|---|
| Scale | Full 80-ft volume | Compact 12×7 ft walls (Vu One Mini) |
| Upfront Cost | $8–16M for full build | Sub-$100K purchase or $1–5K/day rentals |
| Setup Complexity | Full crew, tracking, integration | Plug-and-play units or modular rentals |
| Team | In-house VFX, tracking tech, crew | Freelancers, remote art teams, script-to-screen collaborations |
| Asset Access | Custom-built environments | Prebuilt marketplaces; low-cost asset libraries |

Indie creators are increasingly leveraging these tools to produce virtual-content films and series without the Hollywood price tag. Previs leads the charge—letting storytellers visualize scenes early and build confidence before committing to LED shoot days.

How Virtual Production Impacts Creative Possibilities

Shooting Magic Hour All Day Long

One of the most transformative creative advantages of virtual production is the ability to capture “magic hour” (that soft, cinematic twilight lighting) indefinitely. Up to 4 “locations” can be shot in a single day, with adjustable sun positioning, weather, and ambient effects—all while maintaining consistent color and atmosphere.

A recent test by a mobile VP provider (Magicbox) demonstrated a reduction of over 60% in carbon emissions by eliminating travel and repeated location setups, all while crafting continuous “premium light” scenes.

Directors gain full creative control over lighting, time-of-day, and atmospheric tones—without the constraints of the sun or schedule.

Previs as Production: Moving Creative Decisions Upstream

In virtual production pipelines, previs becomes part of actual production. As Zach Alexander of Lux Machina puts it, “Every hour of pre-production is worth two hours of production.” This isn’t mere planning; it’s active, live content creation:

- Pitchvis and techvis steps produce camera-ready animations that become final assets.

- Tools like Cine Tracer allow directors and DPs to traverse a 3D scene, adjust lights, cameras, and lenses in real time before shooting begins, then replicate those setups directly on volume.

- Real-time previz democratizes creative input—art directors, cinematographers, and VFX teams can collaborate synchronously, reducing miscommunication and rework.

This shift meshes pre-production and principal photography into one cohesive phase, slashing iteration cycles and fostering spontaneity.

Collaboration Across Departments in Pre-Render

Virtual production breaks down departmental silos by enabling shared creative and technical workflows:

- Directors, cinematographers, VFX supervisors, and production designers can virtually walk through scenes via VR scouting or remote techvis sessions, refining camera placement and lighting presets before the actors arrive.

- Live Link and nDisplay sync lens metadata, lighting setups, and engine output with LED volumes, ensuring that what’s designed in pre-render is captured live on set later.

- As MASV notes, “fix it in pre” replaces the old creative mantra of “fix it in post,” making on-the-fly adjustments collaborative and immediate.

This integrative approach boosts efficiency and ensures creative consistency across all phases of production.

Workflow Considerations and Pitfalls

Sync Issues Between Engine and Camera

Synchronization is mission-critical for virtual production; even slight delays can shatter the illusion. Systems like Mo‑Sys VP Pro XR or StageCraft demand tight integration via genlock—aligning camera shutter, LED media servers, tracking, and engine rendering. Without this, issues like flicker, rolling bands, or background lag occur.
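Genlock keeps these devices on a shared clock, but the camera's exposure also has to line up with the LED refresh. One simple check, offered here as a heuristic rather than a vendor rule, is whether each exposure contains a whole number of LED refresh cycles; a fractional count means different sensor rows can integrate different amounts of light, which can appear as bands. The function names and example refresh rate below are assumptions, and the model is deliberately simplified: real LED processors, PWM schemes, and rolling-shutter readout add constraints, and phase alignment is genlock's job.

```python
def exposure_seconds(fps: float, shutter_angle_deg: float) -> float:
    """Exposure time for a rotary-shutter equivalent: (angle / 360) / fps."""
    return (shutter_angle_deg / 360.0) / fps


def refresh_cycles_per_exposure(fps: float, shutter_angle_deg: float,
                                led_refresh_hz: float) -> float:
    """How many LED refresh cycles fall inside one exposure."""
    return exposure_seconds(fps, shutter_angle_deg) * led_refresh_hz


def banding_risk(fps: float, shutter_angle_deg: float, led_refresh_hz: float,
                 tolerance: float = 1e-6) -> bool:
    """Simplified heuristic: flag a fractional number of refresh cycles per exposure."""
    cycles = refresh_cycles_per_exposure(fps, shutter_angle_deg, led_refresh_hz)
    return abs(cycles - round(cycles)) > tolerance


print(banding_risk(24, 180, 3840))    # False: exactly 80 cycles per exposure
print(banding_risk(24, 172.8, 3840))  # True: 76.8 cycles per exposure
```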

Latency remains a challenge: Unreal Engine stages typically aim to keep total delay within 7–8 frames (about 1/3 second at 24 fps). The Mandalorian's production reportedly ran with an 8-frame lag, which was still manageable, but faster motions such as whip pans or Steadicam pushes can expose lag-induced stutter.
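Frame counts convert to time by dividing by the frame rate, which is where the ~1/3 second figure comes from (8 frames at 24 fps). This sketch also estimates how far the rendered background would trail the lens during a steady pan, assuming a constant pan speed and no motion prediction or overscan; the pan speeds are illustrative.

```python
def latency_ms(frames: int, fps: float) -> float:
    """End-to-end delay expressed in milliseconds."""
    return frames / fps * 1000.0


def background_lag_deg(frames: int, fps: float, pan_deg_per_s: float) -> float:
    """Degrees the rendered view trails the lens during a steady pan (no prediction)."""
    return pan_deg_per_s * frames / fps


for frames in (7, 8):
    print(f"{frames} frames at 24 fps = {latency_ms(frames, 24):.0f} ms")  # 292 ms, 333 ms
print(f"slow pan (10 deg/s): {background_lag_deg(8, 24, 10):.1f} deg behind")   # 3.3
print(f"whip pan (180 deg/s): {background_lag_deg(8, 24, 180):.1f} deg behind") # 60.0
```

The same delay that is invisible on a slow pan becomes a 60° error on a whip pan, which is why fast moves are where latency shows.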

Multi-camera setups complicate matters: only one camera view can render at a time. Switching between cameras may render stale frames for up to 5–6 frames, forcing creative restrictions unless custom systems are deployed.

Bottom line: these tight latency tolerances require powerful sync hardware, tight frame budgets, and workflow discipline, more so than on green-screen shoots.

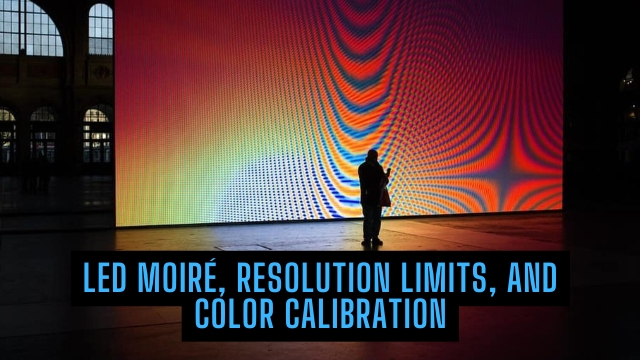

LED Moiré, Resolution Limits, and Color Calibration

Moiré interference arises when the LED pixel grid, as imaged by the lens, comes close to the spacing of the camera sensor's photosites. Even with high-end cameras like the RED Komodo, patterns can appear and disappear depending on lens and focus, and are sometimes solvable only through optical low-pass filters or by shifting distance or focus.
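Whether the LED grid is likely to alias can be estimated by projecting the LED pitch onto the sensor (thin-lens magnification f / (d - f)) and comparing it with the photosite pitch. If one LED pixel covers fewer than about two photosites, the grid sits above the sensor's Nyquist limit and can alias into moiré when the wall is in focus. This is a heuristic sketch: the 2.6 mm pitch, 35 mm lens, and 4.4 µm photosite size are assumed example values, and Bayer demosaicing, optical low-pass filters, and defocus all shift the real threshold.

```python
def projected_led_pitch_um(led_pitch_mm: float, focal_mm: float,
                           distance_mm: float) -> float:
    """Size of one LED pixel's image on the sensor (thin-lens magnification), in microns."""
    if distance_mm <= focal_mm:
        raise ValueError("wall must be farther away than one focal length")
    magnification = focal_mm / (distance_mm - focal_mm)
    return led_pitch_mm * magnification * 1000.0


def moire_risk(led_pitch_mm: float, focal_mm: float, distance_mm: float,
               photosite_um: float) -> tuple[bool, float]:
    """Heuristic: fewer than ~2 photosites per LED pixel puts the grid above Nyquist."""
    samples = projected_led_pitch_um(led_pitch_mm, focal_mm, distance_mm) / photosite_um
    return samples < 2.0, samples


# Assumed values: 2.6 mm wall pitch, 35 mm lens, ~4.4 micron photosites.
for distance_m in (4, 20):
    risky, samples = moire_risk(2.6, 35.0, distance_m * 1000.0, 4.4)
    print(f"{distance_m} m: {samples:.1f} photosites per LED pixel, risk={risky}")
```

In this example the grid is comfortably resolved at 4 m but drops to roughly one photosite per LED pixel at 20 m, consistent with the point above that changing distance or focus can make moiré appear or disappear.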

Color fidelity issues stem from the narrow-band RGB emitters in LED panels. Skin tones and costume colors may shift in-camera due to metamerism: the narrow LED spectra can render colors differently from the broad-spectrum light of standard sources such as HMIs. Netflix’s Production Guide flags color shift and banding as common issues.

Calibration is vital: Advanced workflows calibrate LED panels to camera sensors. Research (e.g., from Paul Debevec’s team) uses per-camera linear color transforms to reconcile live background and subject illumination, reducing skin-tone anomalies.
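A per-camera linear color transform is a 3×3 matrix that maps what the camera records to the values it should record. The sketch below fits such a matrix by ordinary least squares over a set of color-chart patches, using only the standard library. It is a generic fitting approach offered for illustration, not the specific method published by Debevec's team, and the chart data is synthetic.

```python
def mat3_inverse(m):
    """Inverse of a 3x3 matrix via the adjugate; raises on (near-)singular input."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("matrix is singular")
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[x / det for x in row] for row in adj]


def fit_color_matrix(measured, reference):
    """Least-squares 3x3 matrix M such that M @ measured[n] ~= reference[n]."""
    # Normal equations: M = (sum of y x^T) @ inverse(sum of x x^T).
    xxt = [[sum(x[r] * x[c] for x in measured) for c in range(3)] for r in range(3)]
    yxt = [[sum(y[r] * x[c] for x, y in zip(measured, reference)) for c in range(3)]
           for r in range(3)]
    inv = mat3_inverse(xxt)
    return [[sum(yxt[r][k] * inv[k][c] for k in range(3)) for c in range(3)]
            for r in range(3)]


def apply_matrix(m, rgb):
    """Multiply a 3x3 matrix by an RGB triple."""
    return tuple(sum(m[r][c] * rgb[c] for c in range(3)) for r in range(3))


# Synthetic example: pretend the camera distorts colors by `true_m`,
# generate chart readings with it, then fit a correction from the data.
true_m = [[1.10, -0.05, 0.00],
          [0.02, 0.95, 0.03],
          [0.00, -0.10, 1.20]]
chart = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0),
         (0.5, 0.5, 0.5), (0.2, 0.7, 0.1)]
recorded = [apply_matrix(true_m, p) for p in chart]
correction = fit_color_matrix(recorded, chart)   # maps recorded -> reference
corrected = [apply_matrix(correction, rgb) for rgb in recorded]
```

With real footage the patches would come from a chart shot under the volume's light, and the residual error after fitting indicates how well a purely linear correction can work for that camera.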

Training Crews for Game Engine Cinematography

Virtual production demands new crew roles and skillsets:

- VP Supervisors/Techvis Leads bridge DPs and engine teams—ensuring tracking, lighting, and lens metadata sync with Unreal or Unity assets.

- VP Camera Operators need fluency in virtual lens options and live game-engine interfaces, tweaking focal length or depth of field from engine menus, for instance to mimic anamorphic workflows in Unreal.

- Multi-camera coordination: VP teams must plan for camera switches, frame latency, and viewport handoffs, since volume work has traditionally been a single-camera exercise.

- Cross-disciplinary communication: Game-engine artists, lighting techs, and cinematographers must work in real time—jointly. “Virtual production requires a shift in mindset,” says Netflix broadcast lead Adam Callaway.

- Comprehensive testing protocols: Pre-shoot checklists must include moiré testing, color consistency, latency trials, and alignment between practical lighting and virtual skies—broken setups mean on-set failure.
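Matching a virtual lens to a physical one starts with the angle of view. For a rectilinear lens, the horizontal angle of view is 2·atan(w / 2f), and an anamorphic lens with squeeze factor s behaves horizontally as if the sensor were s times wider. The sketch below applies that geometry; it does not use Unreal's camera API, assumes a 24.9 mm-wide (roughly Super 35) sensor as an example, and ignores distortion, focus breathing, and close-focus effects.

```python
import math


def horizontal_fov_deg(focal_mm: float, sensor_width_mm: float,
                       squeeze: float = 1.0) -> float:
    """Horizontal angle of view of a rectilinear lens focused at infinity.

    An anamorphic lens widens the captured horizontal field by its squeeze
    factor, so the effective width is sensor_width_mm * squeeze.
    """
    effective_width = sensor_width_mm * squeeze
    return math.degrees(2 * math.atan(effective_width / (2 * focal_mm)))


# Assumed 24.9 mm-wide sensor.
print(f"50 mm spherical:     {horizontal_fov_deg(50, 24.9):.1f} deg")
print(f"50 mm 2x anamorphic: {horizontal_fov_deg(50, 24.9, squeeze=2.0):.1f} deg")
```

With a 2x squeeze, the 50 mm anamorphic covers the same horizontal field as a 25 mm spherical lens on the same sensor, which is the relationship a virtual camera needs to reproduce.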

The Future of LED Stages — Standard or Specialized?

Will Every Studio Need a Volume Stage?

LED volumes are rapidly transitioning from niche technology to fundamental infrastructure. According to Futuresource Consulting, approximately 200 LED volume stages were operational globally by the end of 2023, an indication that these are becoming staples in soundstage-capable facilities.

Futuresource and Deloitte reports suggest virtual production will increasingly become standard practice in content creation, spanning high-end features, episodic TV, and even news and sports broadcasts. Studios like Trilith in Georgia already feature LED-ready stages by default, not luxury upgrades.

That said, VP remains capital-intensive and requires technical depth—some studios will likely opt for shared volumetric hubs or rental partnerships over building their own. On the horizon, studios may adopt more modular LED setups—portable volumes that can be plugged into existing stages rather than purpose-built spaces.

Cross-Industry Impact: Commercials, Music Videos, and Live Events

LED volumes are crossing over into other industries:

- Advertising and commercials: Live Driven’s LED stages are now used to shoot complex product ads—integrating in-camera VFX with interactive lighting—reducing post-production by up to 40%.

- Live music tours & events: Disguise technology—previously used for U2, Taylor Swift, and MSNBC—has been redeployed for film shoots like Mr. & Mrs. Smith. Hybrid AR concerts are rising; Coachella’s Flume show used Unreal Engine to project AR visuals into the livestream, merging real and virtual audiences.

- Broadcast & sports: XR studios and LED walls are now integrated into daily production, enabling real-time graphics—weather, sports stats, elections—while elevating content quality and efficiency.

LED volumes are thus becoming transformational in music, advertising, and live broadcast sectors—not just film and TV.

The Convergence of AR, XR, and VP

Virtual production is merging with augmented and extended reality:

- XR (Extended Reality) studios overlay virtual sets atop live-action environments. The concept of “immersive volume” expands virtual production to include AR overlays, holographic stage extensions, and interactive performances.

- Disguise’s recent roadmap envisions AI-driven tools that will soon let creators “type ‘white chair’ and have it appear” instantly through integrated, Getty-aligned generative AI pipelines. This hints at rapid prototyping and asset creation.

- AR streaming events—like Coachella’s virtual parrots for livestream viewers—point to a hybrid spectator model combining LED walls, on-site live elements, and digital augmentation for remote viewers.

- The “digital twin” concept—used in live events for venue mapping—is being adopted for future studio design, enabling remote scouting, testing, and real-time environment adaptation across VP workflows.

Summary & Outlook

| Trend | Direction & Impact |
|---|---|
| Volume ubiquity | Hundreds globally, more facilities LED-ready by default |
| Cross-sector adaptation | Advertising, live events, broadcast: VP is expanding beyond film/TV |
| Hybrid AR/XR integration | AR overlays on LED, AI asset generation, immersive live streams |
| Digital twin innovation | Remote techvis and environment modeling join VP pipelines for pre/post cohesion |

LED volume stages are shifting from high-end novelty to core infrastructure—and they’re evolving further into hybrid platforms that merge film, broadcast, concerts, and virtual experiences. The future will be less about whether studios adopt VP and more about how they integrate it into multi-disciplinary creation ecosystems.

A New Language of Filmmaking

The rise of LED volumes and virtual production marks more than just a technological evolution—it signifies a complete redefinition of cinematic language. The way we plan, light, shoot, and post-produce content is transforming at every layer, shifting creative decision-making upstream and fostering deeper collaboration between traditionally siloed departments.

Directors no longer imagine a scene and wait months for it to materialize. They now stand on a soundstage, immersed in a live, photoreal world rendered in real time—reacting, revising, and experimenting with full visual context. Cinematographers light digital sunsets at 11 a.m. Script notes now trigger immediate set changes, not downstream VFX overhauls. Editors and colorists inherit pixel-perfect footage captured with intention, not guesswork.

Virtual production, once a curiosity seen in behind-the-scenes reels, is rapidly becoming a central pillar of modern filmmaking—accessible not just to studios like Disney and Netflix, but increasingly to nimble indie teams armed with affordable LED walls, cloud-based tools, and game engine fluency.

It isn’t just about hardware. It’s about mindset. Virtual production demands new roles, new workflows, and new literacy in real-time thinking. It rewards pre-planning, technical integration, and cross-departmental empathy. And most of all, it gives storytellers new superpowers—compressing months of iteration into hours, and turning creative imagination into immediate visual truth.

This is not the end of traditional filmmaking, nor the replacement of practical craftsmanship. It’s the emergence of a hybrid craft: a “cinematic OS update” that layers digital flexibility atop tangible storytelling. Like the introduction of sound, color, or non-linear editing, virtual production isn’t a trend—it’s a new chapter.